App Store Holiday Schedule 2020

Posted on November 23rd, 2020

When is the App Store Holiday Schedule 2020? Learn about the dates of this year's shutdown and how to prepare.

Google has announced a change to the installation metrics reported for Google Play A/B testing experiments. These metrics can give developers new insight into how well each variant converts and retains users. Using the information gleaned from the experiments, developers and marketers can test and refine their App Store Optimization strategy and improve their app’s performance.

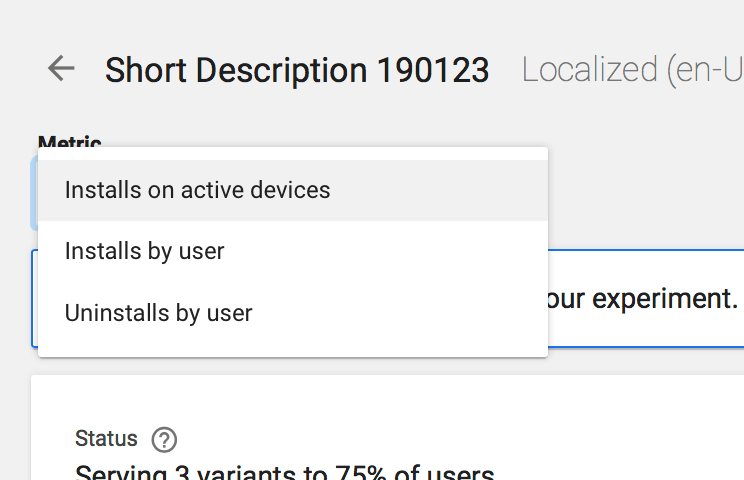

The new metrics replace the previous statistics, including “Active Devices,” “Installs by User” and “Uninstalls by User.” In their place, developers now get hour-by-hour updates on first-time installations and on how many new users uninstall within the first day.

Previous Metrics

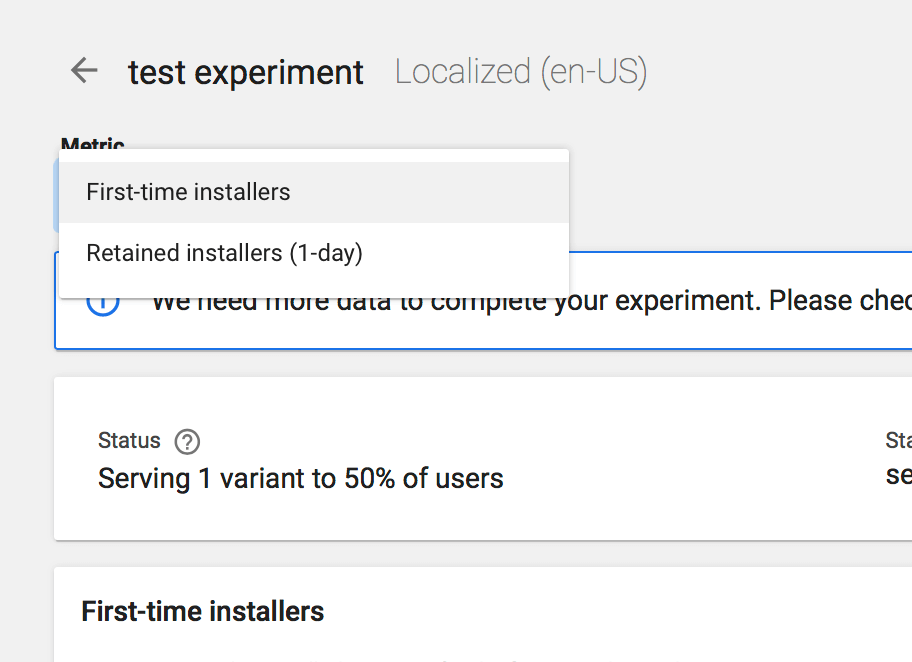

New Metrics

Perhaps the most important change is the shift from daily metrics to hourly ones. Developers now get updated figures every hour rather than once a day, so they can see current data when they need it.

Data that is out of date, even by a day, can miss recent but important changes. Hourly updates let developers check current metrics on demand.
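The Python sketch below makes the daily-versus-hourly difference concrete by bucketing the same install timestamps both ways. The timestamps and the `bucket` helper are hypothetical. The Play Console aggregates experiment data itself and does not expose raw events like these.

```python
from collections import Counter
from datetime import datetime

# Hypothetical install timestamps for one listing variant (illustration only).
installs = [
    datetime(2020, 11, 23, 9, 5),
    datetime(2020, 11, 23, 9, 40),
    datetime(2020, 11, 23, 14, 12),
    datetime(2020, 11, 24, 8, 59),
]


def bucket(timestamps, granularity):
    """Count events per clock hour ("hour") or per calendar day ("day")."""
    if granularity == "hour":
        key = lambda t: t.replace(minute=0, second=0, microsecond=0)
    elif granularity == "day":
        key = lambda t: t.date()
    else:
        raise ValueError(f"unknown granularity: {granularity}")
    return Counter(key(t) for t in timestamps)


hourly = bucket(installs, "hour")
daily = bucket(installs, "day")
print(hourly)
print(daily)
```

In this made-up sample, the hourly view shows two installs in the 9:00 hour on November 23rd. The daily total of three for that date hides that burst.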

The first new metric is “First Time Installers.” It replaces the previous metrics “Installs on Active Devices” and “Installs by User” and counts only users who are installing the app for the first time. This differs from overall installations, since users often re-install an app they’ve downloaded in the past.

By tracking the first-time installs, you can determine how well your app is converting new users. This is essential for A/B testing experiments, as the variants that convert users better are the ones that should be incorporated into the final design.
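A few lines of Python can sketch the difference between first-time installs and re-installs. Everything here is an assumption made for illustration: the `Install` record, the user IDs, and the idea that earlier installers are known as a set. Google Play computes the First Time Installers figure itself and reports only the resulting counts.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Install:
    user_id: str  # hypothetical anonymised user ID
    variant: str  # listing variant the user saw, e.g. "A" or "B"


def first_time_installers(installs, prior_users):
    """Count, per variant, users installing the app for the first time.

    Users in `prior_users` already had the app at some point, so their
    installs are re-installs. A user who installs twice during the
    experiment is also counted only once.
    """
    seen = set(prior_users)
    counts = Counter()
    for inst in installs:
        if inst.user_id not in seen:
            seen.add(inst.user_id)
            counts[inst.variant] += 1
    return counts


# Hypothetical experiment data.
installs = [
    Install("u1", "A"), Install("u2", "A"), Install("u3", "A"),
    Install("u2", "A"), Install("u4", "B"), Install("u5", "B"),
]
prior_users = {"u3", "u5"}  # users who had installed the app before

total_installs = Counter(inst.variant for inst in installs)
new_installers = first_time_installers(installs, prior_users)
print(total_installs)  # every install event, re-installs included
print(new_installers)  # first-time installers only
```

Half of each variant's raw installs in this sample are re-installs or repeat installs. That is the noise a first-time-only count is meant to strip out before variants are compared.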

In addition to First Time Installers, the Developer Console also tracks how many of those new users keep the app for at least one day after installing it. This “1-Day Retained Installers” metric replaces “Uninstalls by User.” It counts only new users who uninstall within the first day rather than all users.

The retention rate matters for an app’s ranking within the store. If too many users uninstall it, that can signal that the app is fraudulent, buggy or otherwise poor.

If the “1-Day Retained Installer” rate is low overall, developers should test and examine their app further to determine what’s driving users away. If the rate is low only for a certain A/B testing variant, that version of the listing may be misleading users. They may install the app expecting a certain focus, theme or features based on the creatives and description, only to find that the app itself is different from what they’ve been led to believe.

Even if the creative set converts well, it doesn’t matter if users uninstall the app within the first day of trying it.
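The Python sketch below illustrates the retention reasoning above. It computes a 1-day retention rate per variant and flags variants that fall well behind the best one. The data layout, the 24-hour cutoff and the 0.8 threshold in `flag_low_retention` are assumptions chosen for illustration. They are not how the Play Console defines or reports the metric.

```python
from datetime import datetime, timedelta

ONE_DAY = timedelta(days=1)


def retained_one_day(installed, uninstalled):
    """True if the app was kept for at least 24 hours (assumed cutoff)."""
    return uninstalled is None or uninstalled - installed >= ONE_DAY


def retention_by_variant(first_installs):
    """Share of first-time installers per variant who kept the app a day.

    `first_installs` holds (variant, install_time, uninstall_time) tuples,
    with uninstall_time set to None if the app is still installed.
    """
    totals, kept = {}, {}
    for variant, installed, uninstalled in first_installs:
        totals[variant] = totals.get(variant, 0) + 1
        if retained_one_day(installed, uninstalled):
            kept[variant] = kept.get(variant, 0) + 1
    return {v: kept.get(v, 0) / n for v, n in totals.items()}


def flag_low_retention(rates, ratio=0.8):
    """Variants retaining less than `ratio` times the best variant's rate."""
    best = max(rates.values())
    return sorted(v for v, r in rates.items() if r < ratio * best)


# Hypothetical first-time installs for two listing variants.
t0 = datetime(2020, 11, 23, 12, 0)
first_installs = [
    ("A", t0, None),
    ("A", t0, t0 + timedelta(days=3)),
    ("A", t0, t0 + timedelta(hours=2)),
    ("A", t0, None),
    ("B", t0, t0 + timedelta(hours=1)),
    ("B", t0, t0 + timedelta(hours=5)),
    ("B", t0, None),
    ("B", t0, t0 + timedelta(minutes=30)),
]
rates = retention_by_variant(first_installs)
print(rates)
print(flag_low_retention(rates))
```

If every variant scored low, the app itself would be the likelier cause. When a single variant is flagged, as B is here, the likelier cause is that listing's creatives and description, even if it brings in more installs.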

These metrics provide valuable insight into how well the tested variants perform, which can shape the app’s ASO. Seeing new metrics every hour, rather than every day, enables a more detailed, on-demand look at an app’s performance. The first-time installer rate is an important part of an app’s performance statistics, while retained installers are essential for evaluating user acquisition.

A/B testing is important for determining how variants of an app store listing perform in a live environment, as it provides real performance data that can be analyzed and compared across variants. The more information developers can gain from the tests, the better the end results can be.

Understanding how well each variant converts first-time installers helps developers improve performance and discover what best attracts new users. By excluding re-installations from previous users who are trying the app again, they get a clearer picture of how strong a first impression the app page makes.

Knowing how many new users keep the app after the first day helps developers learn if there are any performance issues. It can also help determine if the app store listing is aligned with what the app offers, so they can tell if the listing is appealing to users who will enjoy the app.

Testing, revising and refining every aspect of the app page is vital to App Store Optimization. With the A/B testing experiment metrics the Google Play Developer Console now provides, developers can get better insight into how their variants perform and refine their optimization accordingly.

Want more information regarding App Store Optimization? Contact Gummicube and we’ll help get your strategy started.
